Master LLaMA-Factory: Fine-Tune LLMs & VLMs Easily

Key Takeaways

- LLaMA-Factory is an open-source framework designed to simplify and unify the fine-tuning of over 100 large language models (LLMs) and vision-language models (VLMs).

- It offers both a user-friendly WebUI (LlamaBoard) for no-code fine-tuning and a robust Command-Line Interface (CLI).

- The framework supports advanced, efficient fine-tuning techniques like LoRA, QLoRA (with various quantization bits), FSDP, and DeepSpeed, optimizing for memory and speed.

- It allows for diverse training approaches, including Supervised Fine-Tuning (SFT), Reward Model Training, and Preference Optimization (DPO, KTO, ORPO).

- LLaMA-Factory provides tools for experiment monitoring, model evaluation, and flexible deployment, including an OpenAI-style API.

Unleash the Power of Custom LLMs: A Beginner’s Guide to LLaMA-Factory

Ever wished you could take a powerful Large Language Model (LLM) and teach it your specific domain knowledge, or even give it a unique personality? Imagine tailoring an AI to understand the nuances of your industry, summarize complex reports exactly how you need them, or even generate code in your preferred style. This process, known as fine-tuning, is like sending an AI back to school for a specialization.

While fine-tuning LLMs can often seem daunting, involving intricate setups and deep machine learning expertise, what if I told you there’s an open-source tool that makes it incredibly accessible? We’re talking about LLaMA-Factory, a game-changer for anyone interested in customizing LLMs and Vision-Language Models (VLMs).

LLaMA-Factory is an open-source framework built to streamline the entire process of training and fine-tuning over 100 different LLMs and VLMs efficiently. It simplifies model adaptation, enabling developers, researchers, and AI enthusiasts to customize AI models for specific tasks without writing extensive code. Ready to dive in? You can find the tool’s source code and contribute to its development on its GitHub repository.

Key Features

LLaMA-Factory isn’t just another tool; it’s a comprehensive workshop for AI model customization. Here are some of its most important features:

- Unified & Extensive Model Support: It serves as a single framework for fine-tuning a vast array of models, including popular ones like LLaMA, Mistral, Mixtral, Qwen, Gemma, DeepSeek, ChatGLM, and even multimodal LLMs like LLaVA.

- User-Friendly Interfaces: Whether you prefer a visual approach or command-line precision, LLaMA-Factory has you covered. It offers both a WebUI (known as LlamaBoard) for no-code fine-tuning and a robust Command-Line Interface (CLI).

- Efficient Fine-Tuning Techniques: To save memory and accelerate training, LLaMA-Factory integrates cutting-edge techniques such as LoRA and QLoRA (supporting 2/3/4/5/6/8-bit quantization), along with FSDP, DeepSpeed, GaLore, DoRA, and more.

- Diverse Training Approaches: Beyond standard Supervised Fine-Tuning (SFT), it supports advanced methods like Reward Model Training, Direct Preference Optimization (DPO), Kahneman-Tversky Optimization (KTO), and Odds Ratio Preference Optimization (ORPO), giving you flexibility for various learning objectives.

- Hardware Acceleration: Built-in support for FlashAttention-2, Unsloth, and DeepSpeed speeds up training and reduces memory use.

- Comprehensive Experiment Monitoring: Keep track of your fine-tuning progress with integrated support for LlamaBoard, TensorBoard, Weights & Biases (Wandb), and MLflow.

- Flexible Deployment: Once fine-tuned, you can export your models for use with Hugging Face Transformers or vLLM, convert them to the GGUF format, or serve them via an OpenAI-style API.

How to Install/Set Up

Getting started with LLaMA-Factory is straightforward, especially if you have a GPU (which is highly recommended for efficient LLM training!). We’ll use a virtual environment to keep things clean.

Prerequisites:

- Python: Ensure you have Python 3.10 or 3.11 installed.

- Git: For cloning the repository.

- NVIDIA GPU with CUDA Toolkit: Essential for leveraging GPU acceleration during training. We recommend CUDA 12.1 or a compatible version.
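Before cloning anything, it can help to confirm these prerequisites from a script. The sketch below is our own helper, not part of LLaMA-Factory: the function name `check_prerequisites` is made up for illustration, and it treats the presence of `nvidia-smi` on your PATH as a rough hint that an NVIDIA driver is installed. It does not verify the CUDA Toolkit version.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 10)):
    """Return a dict mapping each prerequisite to True or False."""
    return {
        # The interpreter running this script must be new enough.
        "python": sys.version_info[:2] >= min_python,
        # git is needed to clone the repository.
        "git": shutil.which("git") is not None,
        # nvidia-smi ships with the NVIDIA driver; finding it is only a
        # hint that a GPU is usable, not proof that CUDA works.
        "nvidia_gpu": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:12s} {'found' if ok else 'MISSING'}")
```

If any entry reports MISSING, install that prerequisite first; the later steps assume all three are in place.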

Step-by-Step Installation:

- Clone the LLaMA-Factory Repository:

git clone https://github.com/hiyouga/LLaMA-Factory.git

This command downloads the entire LLaMA-Factory project to your local machine.

- Navigate to the Project Directory:

cd LLaMA-Factory

- Install Dependencies:

We’ll use pip to install the necessary Python packages, including PyTorch, bitsandbytes for quantization, and other core components.

pip install -e ".[torch,bitsandbytes]"

If you encounter issues or prefer a minimal installation, you can also try:

pip install -r requirements.txt

For optimal performance, especially with multi-GPU setups, you might also want to install DeepSpeed:

pip install deepspeed

- Verify Your Installation:

To confirm that LLaMA-Factory is correctly set up and accessible via the command line, run the help command:

llamafactory-cli --help

You should see usage information printed to your terminal, indicating a successful installation.
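If you want the same check inside a setup script or CI job, a small Python sketch can look for the command and the package without running them. The helper name `installation_status` is ours, and the import name `llamafactory` is an assumption about how the package is exposed after installation.

```python
import importlib.util
import shutil


def installation_status():
    """Report whether the CLI and the Python package can be located."""
    return {
        # True when the llamafactory-cli executable is on PATH.
        "cli_on_path": shutil.which("llamafactory-cli") is not None,
        # True when Python can find a top-level package named
        # "llamafactory" (assumed import name); nothing is imported.
        "package_importable": importlib.util.find_spec("llamafactory") is not None,
    }


if __name__ == "__main__":
    for check, ok in installation_status().items():
        print(f"{check}: {'ok' if ok else 'not found'}")
```

If the CLI is not found, the usual cause is that the virtual environment used for installation is not the one currently active.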

How to Use (Usage Examples)

LLaMA-Factory offers two primary ways to fine-tune your models: through its intuitive WebUI or via the command line. Let’s explore both.

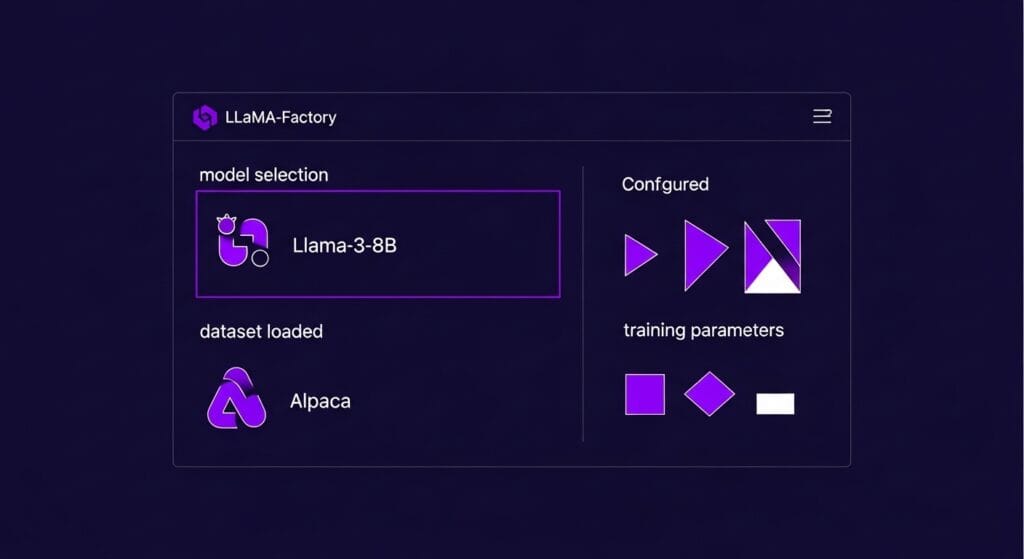

1. Using the WebUI (LlamaBoard)

The LlamaBoard WebUI provides a no-code, graphical interface for fine-tuning, making it perfect for beginners or for quickly experimenting with different settings.

- Launch the WebUI:

From your LLaMA-Factory directory, simply run:

llamafactory-cli webui

This will start a local web server, and you can access the interface through your web browser (usually at http://localhost:7860).

- Interact with the Interface:

Once in the WebUI, you’ll find tabs for:

- Train: Select your base model (e.g., Llama 3 8B), choose a fine-tuning method (like LoRA or QLoRA), load your dataset (the Alpaca and ShareGPT formats are common), adjust hyperparameters, and start the training process.

- Evaluate: Assess your fine-tuned model’s performance.

- Chat: Directly interact with your fine-tuned model to test its responses.

- Export: Save your model in various formats for deployment.

2. Using the Command-Line Interface (CLI)

For more control, scripting, or integration into automated workflows, the CLI is your go-to. Here’s a basic example of Supervised Fine-Tuning (SFT):

- Prepare Your Dataset:

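LLaMA-Factory supports various dataset formats, including Alpaca and ShareGPT. Your data should typically be in a JSON or JSONL file. In the Alpaca format, which this guide uses, each record has ‘instruction’, ‘input’ (optional), and ‘output’ fields; ShareGPT instead stores each sample as a list of conversation turns. A custom dataset also needs an entry in data/dataset_info.json so that the training config can refer to it by name.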
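If you build the dataset from a script rather than by hand, a sketch like the one below can validate Alpaca-style records, write them to a JSON file, and register the file. The helper names (`validate_alpaca`, `write_dataset`) are hypothetical. The registry entry, a `file_name` key under the dataset name in `dataset_info.json`, reflects our understanding of the simplest case, so check it against the repository's data documentation.

```python
import json
from pathlib import Path

REQUIRED_KEYS = ("instruction", "output")  # "input" is optional


def validate_alpaca(records):
    """Raise ValueError if a record lacks a required, non-empty string field."""
    for i, rec in enumerate(records):
        for key in REQUIRED_KEYS:
            value = rec.get(key)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"record {i}: missing or empty '{key}'")
        if not isinstance(rec.get("input", ""), str):
            raise ValueError(f"record {i}: 'input' must be a string")


def write_dataset(records, data_dir, name):
    """Write <name>.json into data_dir and add it to dataset_info.json."""
    validate_alpaca(records)
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    file_name = f"{name}.json"
    (data_dir / file_name).write_text(
        json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    # Keep any datasets that are already registered.
    info_path = data_dir / "dataset_info.json"
    info = {}
    if info_path.exists():
        info = json.loads(info_path.read_text(encoding="utf-8"))
    info[name] = {"file_name": file_name}  # assumed minimal entry
    info_path.write_text(json.dumps(info, indent=2), encoding="utf-8")
    return data_dir / file_name
```

Calling `write_dataset(records, "data", "your_custom_dataset")` from the project directory would create `data/your_custom_dataset.json` in the same shape as the hand-written example that follows.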

[
  {
    "instruction": "Explain the concept of quantum entanglement.",
    "input": "",
    "output": "Quantum entanglement is a phenomenon where two or more particles become linked in such a way that they share the same fate, regardless of the distance between them. Measuring the state of one entangled particle instantly influences the state of the others."
  },
  {
    "instruction": "Summarize the key benefits of open-source software.",
    "input": "",
    "output": "Open-source software offers benefits like cost-effectiveness, flexibility, community support, enhanced security through transparency, and rapid innovation due to collaborative development."
  }
]

- Create a Configuration File (e.g., sft_config.yaml):

This YAML file defines your training parameters.

# sft_config.yaml
model_name_or_path: "meta-llama/Llama-2-7b-hf" # Or any other supported model
dataset: "your_custom_dataset" # Dataset name registered in data/dataset_info.json, not a file path
template: "llama2" # Prompt template; should match the model family
stage: "sft"
do_train: true
finetuning_type: "lora" # Or "full" or "freeze"; for QLoRA, keep "lora" and add quantization_bit: 4
lora_target: "q_proj,v_proj"
output_dir: "output_sft_model"
num_train_epochs: 3
per_device_train_batch_size: 4
learning_rate: 2.0e-4 # Written with a decimal point so YAML reads it as a float
fp16: true # Use mixed precision for faster training if your GPU supports it
plot_loss: true

Note: Replace "meta-llama/Llama-2-7b-hf" with the actual model you want to fine-tune. You’ll need to have access to its weights, often via Hugging Face. The dataset value is the name under which your file is registered in data/dataset_info.json.

- Launch Fine-Tuning:

Execute the training process using the CLI and your configuration file.

llamafactory-cli train sft_config.yaml

This command will start the fine-tuning process, displaying progress and metrics in your terminal.

- Inference with Your Fine-Tuned Model:

Once training is complete, you can chat with your model:

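llamafactory-cli chat chat_config.yaml

Here chat_config.yaml (the name is only an example) is a small YAML file that sets model_name_or_path to the base model, adapter_name_or_path to output_sft_model, template to the one used for training, and finetuning_type to lora. The chat command expects settings like these rather than a bare output directory, because a LoRA run saves only the adapter weights. You can then type your prompts directly into the terminal to see your model’s responses.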
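The OpenAI-style API mentioned earlier gives you a programmatic alternative to the terminal chat. The sketch below uses only the standard library and assumes a server is already running locally, for example one started with `llamafactory-cli api` and an inference config. The port 8000, the `/v1/chat/completions` path, and the model label are assumptions based on the OpenAI convention, so adjust them to match your server.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8000/v1",
                       model="output_sft_model"):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,  # placeholder label; some servers ignore it
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt and return the text of the first choice."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Requires a running server; otherwise this raises a URLError.
    print(ask("Summarize the key benefits of open-source software."))
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any network call happens.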

Conclusion

LLaMA-Factory truly democratizes the fine-tuning of large language models and vision-language models. Its ability to simplify complex processes, support a wide range of models and techniques, and offer both graphical and command-line interfaces makes it an invaluable tool for anyone looking to customize AI. Whether you’re aiming to build a specialized chatbot, enhance text generation, improve code completion, or conduct advanced AI research, LLaMA-Factory provides the robust and flexible foundation you need.

Are you excited to experiment with LLaMA-Factory and build your own custom AI applications? The world of engineering and information technology is constantly evolving, and tools like this are at the forefront of innovation. Ready to dive deeper and showcase your own AI creations? Our annual ‘Inov8ing Mures Camp’ is the perfect place to learn, network, and present your groundbreaking projects. Join us to transform your ideas into reality!
