Crawl4AI: The Open-Source Web Crawler for LLMs & AI

In the rapidly evolving landscape of artificial intelligence, accessing and processing web data efficiently and ethically is paramount. Large Language Models (LLMs) and AI agents thrive on high-quality, structured information, but the web can be a messy place. This is where tools like Crawl4AI step in, revolutionizing how we gather and prepare data for AI applications.

Key Takeaways

- Crawl4AI is an open-source, LLM-friendly web crawler and scraper designed for AI data pipelines.

- It delivers blazing-fast performance and outputs data in AI-ready formats like clean Markdown and structured JSON.

- Key features include advanced browser control, adaptive crawling, and robust extraction strategies (CSS, XPath, LLM-based).

- Installation is straightforward via pip, with a crucial post-installation setup for browser binaries.

- Crawl4AI simplifies complex web scraping tasks, making it ideal for RAG pipelines, AI agent training, and various data-driven projects.

Introduction: Unlocking the Web for AI with Crawl4AI

As professionals at Inov8ing, we understand the critical role data plays in digital transformation and the successful deployment of AI solutions. For businesses and individuals looking to harness the power of AI, the ability to efficiently gather and process web-based information is a game-changer. This is precisely the problem Crawl4AI solves.

Crawl4AI is an open-source, LLM-friendly web crawler and scraper meticulously engineered for large language models, AI agents, and robust data pipelines. It offers blazing-fast, AI-ready web crawling, transforming the chaotic expanse of the internet into structured, consumable data for your AI applications. Whether you’re building a Retrieval-Augmented Generation (RAG) system, training a custom LLM, or simply need to aggregate web content, Crawl4AI provides the speed, precision, and flexibility you need.

You can explore the full code and documentation for Crawl4AI on its official GitHub repository: https://github.com/unclecode/crawl4ai.

Key Features

Crawl4AI stands out with a suite of features designed to make web data acquisition seamless and powerful:

- LLM-Friendly Output: It automatically converts web content into clean Markdown, structured JSON, or raw HTML, making it perfectly optimized for direct ingestion into LLMs and RAG pipelines.

- Structured Data Extraction: Go beyond raw text. Crawl4AI allows you to parse repeated patterns and extract specific data using traditional CSS/XPath selectors or even advanced LLM-based extraction strategies.

- Advanced Browser Control: Gain fine-grained control over your crawling operations with features like headless mode, custom user agents, proxy support, session management, and the ability to execute arbitrary JavaScript for dynamic content.

- High Performance: An asynchronous architecture and parallel crawling let Crawl4AI fetch many pages concurrently, which the project credits for speed that it says outperforms many traditional crawlers and even some paid services.

- Adaptive Web Crawling: A truly intelligent feature, Crawl4AI can determine when sufficient information has been gathered to answer a specific query, stopping the crawl adaptively to reduce unnecessary resource usage and improve efficiency.

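- Robots.txt Compliance: Supports ethical data collection by checking a site's robots.txt rules before crawling. In recent releases this check is opt-in (the check_robots_txt option on CrawlerRunConfig), so enable it explicitly.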

- Multi-Browser Support: Supports various browsers, including Chromium, Firefox, and WebKit, for comprehensive web interaction.

- Comprehensive Content Extraction: Extracts not only text but also internal/external links, images, audio, video, and page metadata, providing a rich dataset.
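To make the robots.txt feature in the list above more concrete, here is a minimal sketch of what such a check involves, written with Python's standard `urllib.robotparser`. It illustrates the idea only and is not Crawl4AI's internal implementation; the robots.txt content, the `my-crawler` user agent, and the URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Keep only the URLs that the rules allow this user agent to fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


parser = build_parser(ROBOTS_TXT)
candidates = [
    "https://example.com/blog/post-1",
    "https://example.com/private/report",
]
print(allowed_urls(parser, "my-crawler", candidates))
```

A crawler that respects robots.txt runs a filter like this before each request, so disallowed paths are never fetched.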

How to Install/Set Up

Getting started with Crawl4AI is straightforward. We’ll walk you through the basic installation steps using pip, the Python package installer.

Prerequisites:

- Python 3.8+

- pip (typically comes with Python)

- A terminal or command prompt

Step-by-Step Installation:

Install the Crawl4AI Package: Open your terminal and run the following command to install the latest stable version:

    pip install -U crawl4ai

This command installs the asynchronous version of Crawl4AI, which utilizes Playwright for web crawling.

Run Post-Installation Setup: This is a crucial step that installs the necessary Playwright browser binaries (Chromium by default). Execute:

    crawl4ai-setup

If you encounter any issues during this step, you can manually install the browser dependencies using:

    python -m playwright install --with-deps chromium

Verify Your Installation (Optional but Recommended): To ensure everything is set up correctly, you can use the diagnostic tool:

    crawl4ai-doctor

You can also quickly verify with a simple crawl script:

    import asyncio

    from crawl4ai import AsyncWebCrawler


    async def main():
        async with AsyncWebCrawler() as crawler:
            result = await crawler.arun(url="https://www.example.com")
            print(result.markdown[:300])  # Show the first 300 characters of extracted text


    if __name__ == "__main__":
        asyncio.run(main())
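If the verification script fails with an import error, a quick pre-flight check can tell you which package is missing. The sketch below uses only the standard library; the helper names `is_installed` and `missing_modules` are illustrative, and the check confirms only that the Python packages are importable, not that the browser binaries were downloaded.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be found on the current Python path."""
    return importlib.util.find_spec(module_name) is not None


def missing_modules(required):
    """Return the names from `required` that cannot be imported."""
    return [name for name in required if not is_installed(name)]


# crawl4ai and playwright are the two packages the installation steps rely on.
missing = missing_modules(["crawl4ai", "playwright"])
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("Required packages are importable.")
```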

How to Use (Usage Examples)

Let’s dive into some practical examples of how to leverage Crawl4AI for your data extraction needs.

Example 1: Basic Single-Page Crawl and Markdown Extraction

The simplest way to use Crawl4AI is to fetch a single page and extract its content, often formatted as clean Markdown for LLMs.

    import asyncio

    from crawl4ai import AsyncWebCrawler


    async def basic_crawl():
        print("\n--- Performing Basic Single-Page Crawl ---")
        async with AsyncWebCrawler() as crawler:
            url_to_crawl = "https://www.scrapingbee.com/blog/"
            result = await crawler.arun(url=url_to_crawl)

            if result.success:
                markdown = str(result.markdown)
                # metadata is a plain dict (and may be None), so use .get() instead of attribute access
                title = (result.metadata or {}).get("title")
                print(f"Crawled URL: {result.url}")
                print(f"Page Title: {title}")
                print("\n--- Extracted Markdown (first 500 chars) ---")
                print(markdown[:500])
                # Compute the word count directly from the Markdown text
                print(f"\nTotal Markdown Word Count: {len(markdown.split())}")
            else:
                print(f"Failed to crawl {url_to_crawl}: {result.error_message}")


    if __name__ == "__main__":
        asyncio.run(basic_crawl())

This script initializes an AsyncWebCrawler, navigates to the specified URL, and prints the page’s title, the first 500 characters of its Markdown content, and a word count.
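For a RAG pipeline, the Markdown from a crawl is usually split into smaller chunks before embedding. The following is a minimal sketch of one way to do that, using only the standard library. The function name `split_markdown_by_heading`, the `max_chars` limit, and the sample text are assumptions for illustration, not part of Crawl4AI.

```python
import re


def split_markdown_by_heading(markdown, max_chars=1000):
    """Split Markdown into chunks that start at headings and stay within max_chars."""
    # Split before every line that starts with 1-6 '#' characters followed by a space.
    sections = re.split(r"(?m)^(?=#{1,6} )", markdown)
    chunks = []
    for section in sections:
        section = section.strip()
        if not section:
            continue
        # Cut long sections into fixed-size pieces so no chunk exceeds max_chars.
        for start in range(0, len(section), max_chars):
            chunks.append(section[start:start + max_chars])
    return chunks


# Made-up Markdown standing in for the text of result.markdown.
sample = (
    "# Title\n\nIntro text.\n\n"
    "## Section A\n\nDetails about A.\n\n"
    "## Section B\n\nDetails about B.\n"
)
chunks = split_markdown_by_heading(sample)
for number, chunk in enumerate(chunks, start=1):
    print(number, repr(chunk))
```

Splitting on headings keeps related text together. Note that this simple pattern also splits on `#` lines inside code blocks, so a production chunker would need to handle that case.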

Example 2: Extracting Structured Data with CSS Selectors

For more specific data extraction, Crawl4AI allows you to define a schema using CSS selectors to pull structured information, perfect for populating databases or feeding into specialized AI models.

    import asyncio
    import json

    from crawl4ai import AsyncWebCrawler, CrawlerRunConfig
    from crawl4ai.extraction_strategy import JsonCssExtractionStrategy


    async def structured_extraction():
        print("\n--- Extracting Structured Data with CSS Selectors ---")
        async with AsyncWebCrawler() as crawler:
            # Define the schema using CSS selectors.
            # This example assumes a blog post structure; the selectors are placeholders.
            # JsonCssExtractionStrategy expects a name, a baseSelector for each record's
            # container, and a list of fields. "body" (an assumption) treats the page as one record.
            schema = {
                "name": "Blog Post",
                "baseSelector": "body",
                "fields": [
                    {"name": "title", "selector": "h1.entry-title", "type": "text"},
                    {"name": "author", "selector": "span.author-name", "type": "text"},
                    {"name": "date", "selector": "time.entry-date", "type": "text"},
                    {
                        "name": "paragraphs",
                        "selector": "div.entry-content p",
                        "type": "list",
                        "fields": [{"name": "text", "type": "text"}],
                    },
                ],
            }

            # For demonstration, we'll use a generic blog post URL.
            # You would adapt this to your specific target website.
            url_to_scrape = "https://blog.hubspot.com/marketing/blog-post-template"

            config = CrawlerRunConfig(
                extraction_strategy=JsonCssExtractionStrategy(schema=schema)
            )
            result = await crawler.arun(url=url_to_scrape, config=config)

            if result.success and result.extracted_content:
                print(f"Crawled URL: {result.url}")
                print("\n--- Extracted Structured JSON ---")
                extracted_data = json.loads(result.extracted_content)
                print(json.dumps(extracted_data, indent=2))
            else:
                print(f"Failed to extract structured data from {url_to_scrape}: {result.error_message}")


    if __name__ == "__main__":
        asyncio.run(structured_extraction())

This example demonstrates how to define a JSON schema with CSS selectors and use JsonCssExtractionStrategy to extract specific elements from a webpage. The output is clean, structured JSON.
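Once the JSON is extracted, it usually needs light validation before it goes into a database or a model. Below is a minimal sketch that turns such a JSON string into typed records. The `BlogPost` class, the `parse_posts` helper, and the sample payload are hypothetical; the field names mirror the schema in the example above, and the exact shape of the extracted JSON may differ between library versions.

```python
import json
from dataclasses import dataclass, field


@dataclass
class BlogPost:
    title: str
    author: str = ""
    date: str = ""
    paragraphs: list = field(default_factory=list)


def paragraph_text(item):
    """Accept a paragraph given as a plain string or as a {"text": ...} object."""
    if isinstance(item, dict):
        return (item.get("text") or "").strip()
    return str(item).strip()


def parse_posts(extracted_content):
    """Turn the JSON string from an extraction run into BlogPost records."""
    rows = json.loads(extracted_content)
    if isinstance(rows, dict):  # accept a single object as well as a list
        rows = [rows]
    posts = []
    for row in rows:
        title = (row.get("title") or "").strip()
        if not title:
            continue  # skip records without a title
        texts = [paragraph_text(p) for p in row.get("paragraphs") or []]
        posts.append(BlogPost(
            title=title,
            author=(row.get("author") or "").strip(),
            date=(row.get("date") or "").strip(),
            paragraphs=[t for t in texts if t],
        ))
    return posts


# Hypothetical payload standing in for result.extracted_content.
sample = json.dumps([
    {"title": " Hello World ", "author": "Ada", "date": "2024-01-01",
     "paragraphs": [{"text": "First."}, "Second.", {"text": ""}]},
    {"title": "", "author": "Nobody"},
])
posts = parse_posts(sample)
print(posts)
```

Dropping empty records at this stage keeps selector mismatches (a common failure when a site changes its markup) from silently polluting downstream data.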

Example 3: Deep Crawling Multiple Pages

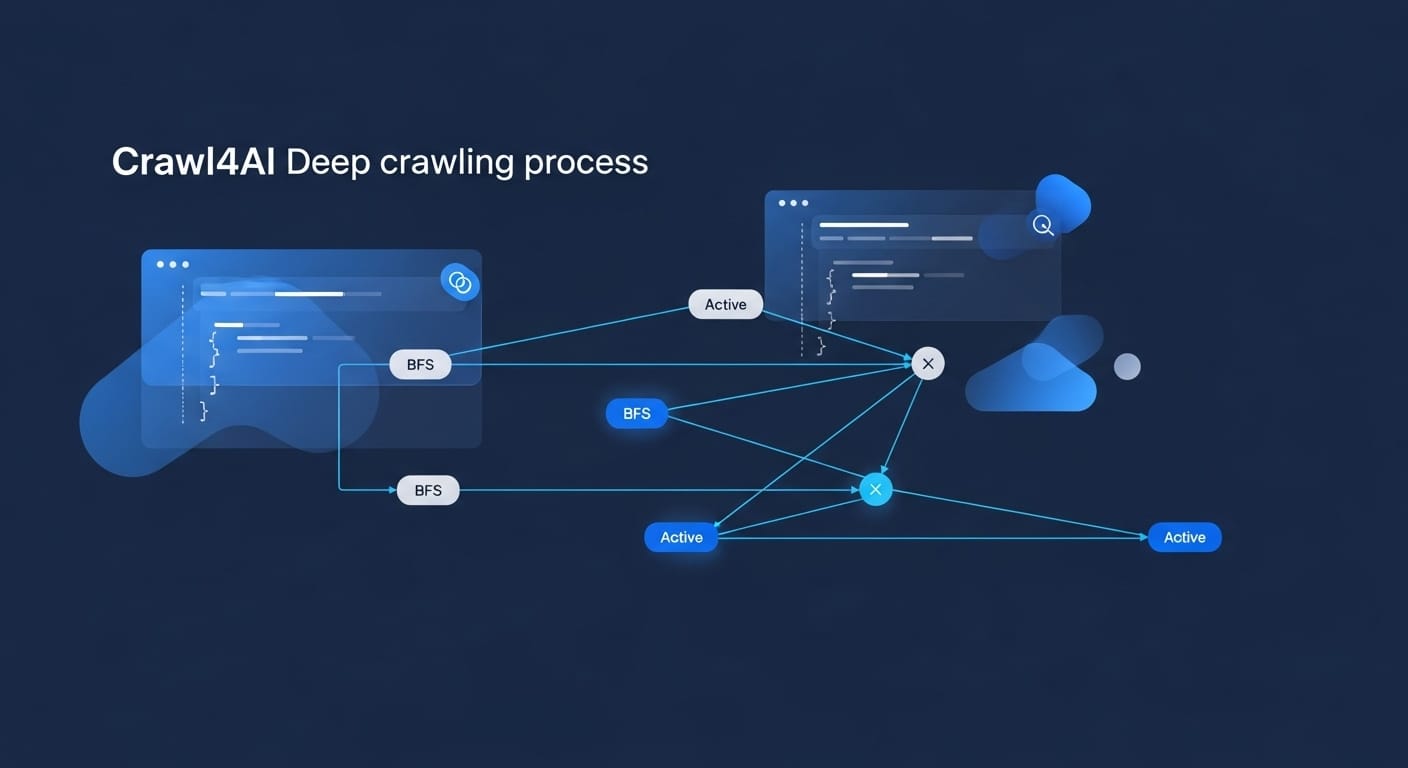

Crawl4AI isn’t just for single pages; it can perform deep crawls, navigating through a website by following links. This is invaluable for building comprehensive datasets.

    import asyncio

    from crawl4ai import AsyncWebCrawler, CrawlerRunConfig
    from crawl4ai.deep_crawling import BFSDeepCrawlStrategy


    async def deep_crawl_example():
        print("\n--- Performing Deep Crawl ---")
        async with AsyncWebCrawler() as crawler:
            start_url = "https://docs.crawl4ai.com/"  # Using Crawl4AI's documentation for a safe example

            # Deep crawling is configured through a strategy object on CrawlerRunConfig
            # (API as documented for recent Crawl4AI releases; check your installed version).
            config = CrawlerRunConfig(
                deep_crawl_strategy=BFSDeepCrawlStrategy(
                    max_depth=1,  # Go one link deep from the start URL
                    max_pages=5,  # Limit to 5 pages for this example
                )
            )

            print(f"Starting deep crawl from: {start_url}")
            # With a deep-crawl strategy set, arun() returns a list with one result per page
            results = await crawler.arun(url=start_url, config=config)

            for i, result in enumerate(results, start=1):
                if result.success:
                    markdown = str(result.markdown)
                    title = (result.metadata or {}).get("title")  # metadata is a dict
                    print(f"\n--- Page {i}: {result.url} ---")
                    print(f"Title: {title}")
                    print(f"Word Count: {len(markdown.split())}")
                    # print(markdown[:200])  # Uncomment to see a snippet of the Markdown
                else:
                    print(f"\n--- Failed to crawl page: {result.url} ---")
                    print(f"Error: {result.error_message}")


    if __name__ == "__main__":
        asyncio.run(deep_crawl_example())

This script sets up a deep crawl starting from the Crawl4AI documentation, limiting the depth and number of pages. It uses a Breadth-First Search (BFS) strategy to discover links.
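To see how the depth and page limits interact in a breadth-first crawl, here is a small self-contained sketch that runs BFS over an in-memory link graph instead of a real website. It is a conceptual illustration, not Crawl4AI's implementation; the `LINKS` graph and the `bfs_crawl_order` function are made up for the example.

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/docs", "/blog", "/about"],
    "/docs": ["/docs/install", "/docs/api"],
    "/blog": ["/blog/post-1"],
    "/about": [],
}


def bfs_crawl_order(start, links, max_depth, max_pages):
    """Return pages in breadth-first order, honoring the depth and page limits."""
    visited = {start}
    order = []
    queue = deque([(start, 0)])
    while queue and len(order) < max_pages:
        page, depth = queue.popleft()
        order.append(page)
        if depth >= max_depth:
            continue  # do not follow links beyond the depth limit
        for target in links.get(page, []):
            if target not in visited:
                visited.add(target)
                queue.append((target, depth + 1))
    return order


print(bfs_crawl_order("/", LINKS, max_depth=1, max_pages=5))
print(bfs_crawl_order("/", LINKS, max_depth=2, max_pages=5))
```

With a depth limit of 1, the crawl stops at the pages linked directly from the start page, even though the page limit would allow more. Raising the depth to 2 lets the page limit become the binding constraint.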

Conclusion

Crawl4AI emerges as an indispensable tool for anyone serious about leveraging web data for AI. Its open-source nature, coupled with its blazing-fast performance and LLM-friendly output, makes it a powerful asset for developers, researchers, and businesses alike. From building sophisticated LLM applications with frameworks like LangChain to enhancing your Generative Engine Optimization (GEO) strategies, Crawl4AI streamlines the often-complex process of web data acquisition.

By providing clean, structured data, Crawl4AI empowers AI agents to make more informed decisions, LLMs to generate more accurate responses, and data pipelines to run with unparalleled efficiency. We encourage you to integrate Crawl4AI into your projects and experience the transformative power of AI-ready web data. If you’re looking to further optimize your AI integration or need expert guidance on automation and content creation strategies, remember that Inov8ing offers comprehensive AI consultation and automation solutions tailored to your specific needs. Get started for free and unlock the full potential of your digital transformation journey with us!